CLUTCH: Contextualized Language Model for Unlocking Text-Conditioned Hand Motion Modelling in the Wild

CLUTCH combines language grounding with motion diffusion to synthesize and caption in-the-wild hand motions from textual prompts.

Hi! I’m Balamurugan Thambiraja, presently pursuing a PhD at the Neural Capture and Synthesis group of Prof. Justus Thies at the Max-Planck Institute for Intelligent Systems, Germany. During my PhD, I am collaborating with Darren Cosker and Sadegh Aliakbarian from Mesh Labs, Microsoft, Cambridge.

In my PhD, I am exploring ways to leverage seq2seq and foundation models for synthesizing human motion and its underlying dynamics. Recently, I have been working with diffusion models and large language models for motion synthesis and editing, aiming to improve realism, controllability, and generalization to in-the-wild scenarios.

Before the PhD, I completed a Master's in Informatics at TUM, Germany, where I worked on human modelling and sign language synthesis at the Visual Computing and Artificial Intelligence group of Prof. Matthias Niessner. Before that, I completed my Bachelor's in Electrical and Electronics Engineering at Kumaraguru College of Technology, Coimbatore, India.

3DiFACE is a diffusion-based method for synthesizing and editing holistic 3D facial animation from audio, enabling diverse generations and seamless edits.

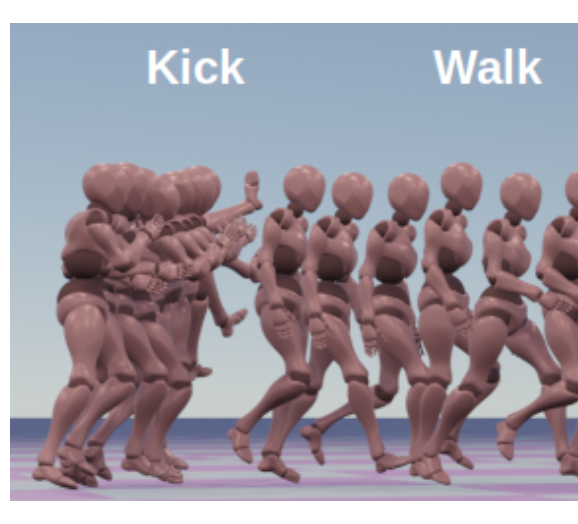

COMAND is a controllable multi-action 3D motion synthesis approach that removes the need for action-labeled data while keeping actions coherent.

Imitator generates person-specific speech-driven 3D facial animation with accurate lip closures using a short reference clip to build a style embedding.

A transformer-based pipeline that synthesizes sign pose sequences from text, achieving state-of-the-art results on the RWTH-PHOENIX 2014T benchmark.

Working on generative AI models for motion.

Worked on an online real-time virtual try-on system. Designed and developed a novel FLOW-based virtual try-on method.

Developed image processing and computer vision algorithms in CUDA for neutron imaging.

Worked on real-time head pose and eye gaze estimation for a driver awareness monitoring system. Contributed to the development of an eye gaze tracking solution that runs in real time on edge-computing devices.

At Wirecard, I worked on the prepaid card application platforms NARADA and CORECARD, mainly automating manual tasks using Python, VB Scripts, RUNDECK, and shell scripts.